by Darius Kazemi, Dec 31, 2019

I wrote this article in mid-2018. It was meant to be a chapter in a book about the role of the bot in American political discourse that I never found a publisher for.

It's 18 months later and "bot" and "paid bot" are still thrown around as pejoratives in political discourse, and yet the definition of "bot" in this context remains slippery.

Hamilton 68, the propaganda bot activity dashboard site that I critique at the end of the essay, is still being cited and misused by sources like NBC News. Increasingly it is used on Twitter by conspiracy theorists as support for literally any claim they want to make.

Anyway, I thought that with the 2020 US Presidential elections coming up, this piece deserved to see the light of day.

In 2015 "bot" was the hot buzzword in Silicon Valley. Huge tech companies like Slack and Facebook raced to support bots on their platforms. Smaller companies rushed to release bots that their customers could talk to like friends. If you wanted millions in funding, you had to say "we support bots" somewhere in your venture capital pitch (here's one example—the article talks about "the age of bots"). Headlines breathlessly declared that bots are the new apps.

But the 2016 election changed all that. We've gone from bot fever to a bot scare. Here's a sampling of headlines from major news organizations:

"The Fake Americans Russia Created to Influence the Election" - September 7, 2017, The New York Times

"Russian trolls and bots disrupting US democracy via Facebook and Twitter" - October 31, 2017, Fox News

"'Bots' may be involved in Virginia governor's race" - November 4, 2017, The Washington Post (this headline was later changed to "Did bots inflame online anger over controversial ad in Va. governor's race?")

"Russian bots promote guns after Florida shooting" - February 16 2018, CNN

"Russian bots behind '4,000% rise' in spread of lies after Salisbury and Syria attacks - Govt analysis" - April 19, 2018, Sky News

"Russian 'bot': 'I have no Kremlin contacts'" - April 20, 2018, Sky News

Bots have been around since at least 1963, when an automated backup program was created for the IBM 7094 computer at MIT by Professor Fernando Corbató. His team called it a "daemon", after both Maxwell's Demon and Socrates' "daemon", a non-human, muse-like creature he claimed lived more or less on his shoulder dispensing advice.

The word "bot" started to replace the word "daemon" with the advent of the internet. In late 1989 (two years before the first web page went live!) a group of researchers at British Telecom created Knowbot. Knowbot was like a proto-Facebook: it crawled the internet to find people's public user information and compile it all into a searchable directory. A physicist in Switzerland could find the phone number of a computer scientist in the United States by providing the name of the scientist's school and a partial spelling of his name.

Around the same time Knowbot was created, Internet Relay Chat (IRC) came into vogue. IRC is a protocol, or set of rules, that defines a kind of chat room and messaging service. IRC was the backbone of social software for decades and is still in use today. In addition to relaying messages between humans, IRC could relay messages between bots. By 1991, bots were a common sight in IRC chat rooms. These bots were more like modern social media bots: little programs that interacted with you as though they were another person. They would spout Monty Python quotes or look up dictionary definitions or play simple games.

Around this time, the World Wide Web was just starting to come into existence, and "spiders" or "web crawlers" started automatically cataloging every web page they could find, mostly by following links upon links upon links. These catalogs became the basis of the world's first web directories and search engines.

When we talk about a web crawler being a bot, it's just a process that's running on its own and needs very little maintenance. That's not quite what we mean when we say "bot" in 2018.

My own definition, for the kind of bots that I make and the kind of bots I'm talking about here, is that a bot is a computer that attempts to talk to humans through technology that was designed for humans to talk to humans. If you get a robocall from a political candidate, that's a bot, because phone calls were meant for humans to talk to each other. But if you get an error message on a screen, that's just a computer communicating with you, not a bot, because error messages are not designed for humans to talk to humans. So if a computer tries to talk to you on instant messenger or on Twitter or through text messaging or on the phone, it is a bot. Even if a computer physically mails you letters, that's a bot, just using a very old technology called "mail."

But just because technical people might agree on what a bot is doesn't mean that the public uses the word in the same way.

If you look at any popular political tweet or Facebook post about United States national politics on any end of the political spectrum, you're bound to find among the replies statements like, "This guy's a bot. Don't listen to him."

What does it mean when someone claims that a celebrity who has been interviewed on TV is a bot?

On one level, bot has become a shorthand for fake, as in "fake news." And if bot just means fake, then the bot in question doesn't even have to be a computer-controlled social media account. It could also be someone who sits in a call center, works for some political group, and astroturfs political campaigns. This really happens, as in the case of the Internet Research Agency (IRA), revealed to the world in 2015 by reporter Adrian Chen at The New York Times. The IRA had 400 to 500 employees at the time. Their duties ranged from posting short comments on news articles expressing pre-approved opinions to, for a small "elite team" of writers, creating a handful of believable online personas that pretended to be real people and occasionally slipped in a piece of pro-Russian propaganda. The IRA even encouraged Americans to host real-world rallies for various political causes, going so far as to provide light financial backing. Special Counsel Robert Mueller indicted the IRA along with 13 Russian citizens as part of his ongoing investigation into ties between the Trump campaign and the Russian government.

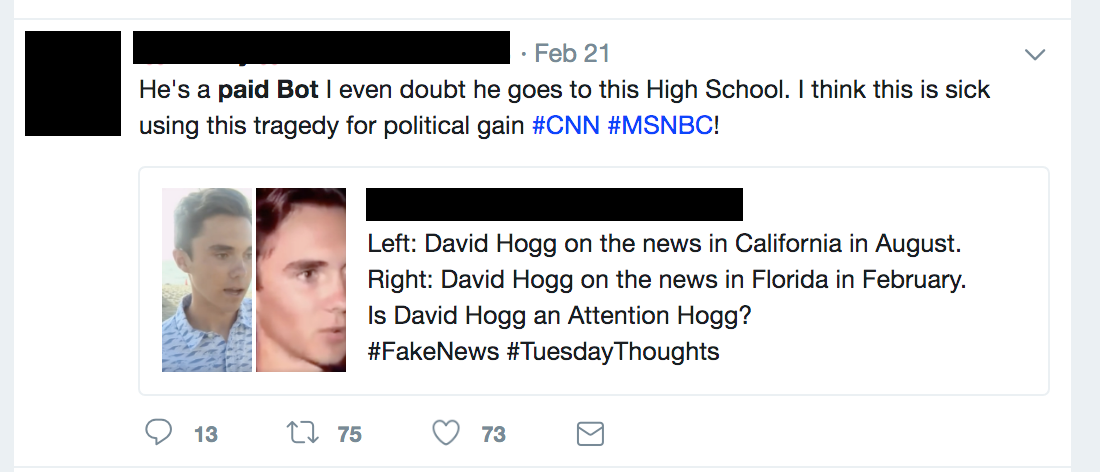

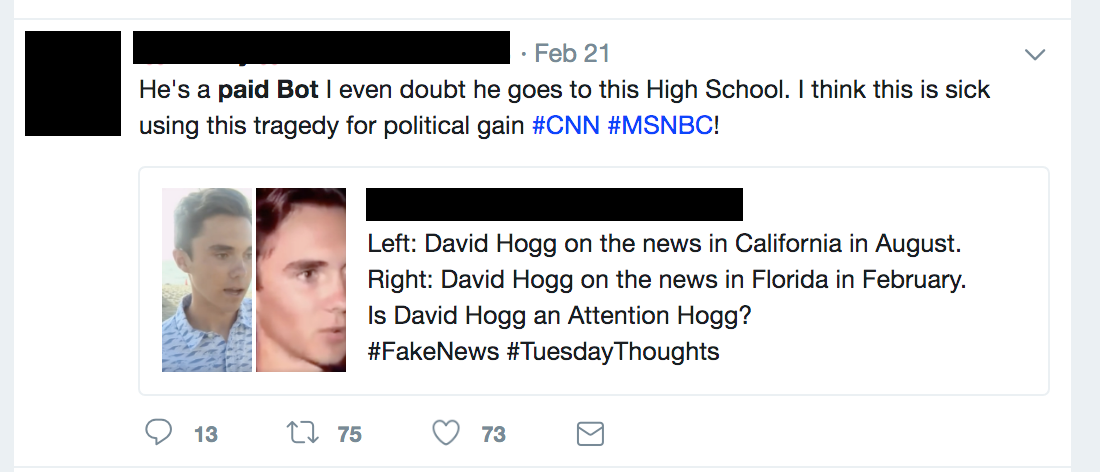

Technically the people who work at the IRA are not bots, but rather professional "trolls" -- people who post online in order to create discord, anger, and confusion. But the usage of the term "bot" has slipped to the point where someone like David Hogg, a student-turned-activist who survived the Parkland school shooting, can be referred to by his critics as a "paid bot".

It is easy to claim that anyone whose politics you dislike is a paid agent of a shadowy organization, which leads to our next usage of the word "bot".

If you spend time reading Twitter search results for the word, it becomes clear that oftentimes "bot" simply means "a person who holds beliefs that I disagree with." Perhaps this comes from the idea that people with unsavory ideas must be gullible or uneducated fools who are unable to think for themselves. In this sense, it's easy to see why a person could be labeled as having "robotic thinking", which is easily shortened to bot.

So a "Russian bot" is someone slavishly devoted to the ideology of the Russian government. Or maybe someone who is so obsessed with Russian bots that they are distracted from "real" issues. Those real Americans who held rallies on behalf of the IRA? People call them bots too, because they were unwitting dupes of Russian misinformation campaigns.

But of course these people aren't bots, not in the technical sense. They're humans. They are people who saw what many consider to be a misinformation campaign from a foreign government and said, "I like this information and I'm going to pass this on to other people in my capacity as a person." That's critical thinking and political decision making. We may not like the result of the critical thinking, and it may not even be a correct result, but it's not slavish adherence to a pervasive propaganda message, either.

It's one syllable. It's three letters long. It conjures images of Russians nefariously infiltrating our daily lives, even infiltrating the highest echelons of our society.

I am, of course, talking about the word "red," as in the Red Scare.

In modern America, "red" is almost exclusively used as a pejorative in political contexts, but it wasn't always that way. It began as a simple descriptive word. For many years before it was associated with communism and socialism, a red flag was used as a symbol of dissent and fighting spirit, sort of the opposite of the white flag of surrender. On March 18, 1871, a group of working-class radical socialists seized control of Paris, raising a red flag over their temporary headquarters at the Hôtel de Ville. From that moment on, the color red was indelibly linked to socialist causes.

For years after that, red was used by socialists and communists to describe themselves, but rarely if ever by the American press. On the rare occasions a term like "red menace" was used by the press, it was to mock the people using this terminology as paranoid cranks. Take for example this November 1911 editorial in The San Francisco Call:

If there is any anti-suffrage movement in Los Angeles it is housed and operating far underground. The league of "lame ducks" has ceased quacking. The very people who were out talking and working against suffrage up to poll closing time on October 10 are now exhorting and beseeching enfranchised woman [sic] to register and vote to save the city from the red menace of socialism.

The editors mock people who opposed the right of women to vote, calling them "lame ducks" and observing that they've shifted gears to the next big issue: fighting the "red menace."

But this kind of language, even jokingly, was rarely used at all by the American press until the Bolshevik Revolution in 1917, when it took a sudden and serious turn.

(Above data gathered by the author from American newspaper databases.)

By 1919 we were in the middle of what historians call The First Red Scare, a precursor to its more famous 1950s Cold War incarnation. Newspapers reported on troves of "red literature" being found in the possessions of anarchist revolutionaries. Labor unions on strike were reported as "armed reds." U.S. Senators like Indiana Republican James Eli Watson were denouncing "Socialists, reds and other radicals" embedded in organizations like the recently-formed Federal Trade Commission (Watson was angry that they were curtailing the rights of the American Manufacturers Association, his former employer).

The media often played a role in amplifying the use of "red" as a pejorative term. Philadelphia's Evening Public Ledger reported on the January 1919 inaugural address of Pennsylvania Governor William Sproul with the headline, "GOVERNOR WARNS OF 'RED' MENACE". However, the transcript of Sproul's address shows no use of the word "red." He does refer to Bolshevism as a "menace," but "red" seems to be the invention of the newspaper.

Inventions aside, it's worth looking at what Sproul was saying during his address. He was considered a centrist Republican for his time. He was anti-labor and pro-business, often breaking worker strikes with lethal force. But he was also pro-environment, founding the state's Arbor Day, and was a staunch supporter of women's suffrage, which became U.S. Constitutional law during his tenure as governor. Speaking of Bolshevik Communism, he said:

The antidote for this social infection, as we shall apply it in Pennsylvania, is good public administration [...] Our people want no mongrel government, devised by fanatics foreign to us in speech, in vision and in purpose, without tradition and without faith, envious of our national strength and prosperity and anxious to disrupt us as a nation and paralyze us as a people. We want to develop our own Democracy, made in America, for America's needs and America's great destiny. We will not give this splendid Republic away to its enemies.

This reads almost identically to modern-day reactions to the perceived influence of Russian social media bots from both politicians and newspapers.

Compare that 1919 speech to these words from New York Times columnist Thomas Friedman in February 2018:

Our democracy is in serious danger. [...] Our F.B.I., C.I.A. and N.S.A., working with the special counsel, have done us amazingly proud. They’ve uncovered a Russian program to [...] poison American politics, to spread fake news, to help elect a chaos candidate, all in order to weaken our democracy. [...] The biggest threat to the integrity of our democracy today is in the Oval Office.

Not long after the Friedman piece was published, my own local alt-weekly newspaper, Portland, Oregon's Willamette Week, debuted a chart-heavy scare piece titled "On Twitter, Oregon’s Top Officials Are Swarmed by Bots". They used a third-party tool of admittedly dubious accuracy to estimate the number of "fake followers" that various local politicians had on their Twitter accounts. The numbers were truly scary: 63% of Governor Kate Brown's Twitter followers were fake!

Yet the paper failed to acknowledge basic points about the "follower economy" on Twitter. When you hit a certain level of popularity with any Twitter account, you become a target for people who try to make money selling fake followers. If I were to set up such a network today, I would want these fake followers to be plausible, because the more plausible the fake account, the less likely it is to be banned by Twitter. So the first thing I would do is pick a location for the bot to "live" -- if that's in Oregon, then I would instruct the bot to follow popular Oregon politicians and news outlets. The reason to do this is simple: real humans tend to follow local politicians and news outlets too.

A complicating factor for politicians is that they are public servants, and many of them see their social media accounts as a channel of information to the public. Unlike private citizens, politicians often have a policy of not blocking accounts from seeing or following their profiles, in case an account belongs to a constituent who uses social media to get information from their representative in government.

So Willamette Week published insinuations about local politicians based on a wildly inaccurate third-party tool. What's funny is if we hold their own Twitter account to the same standards, we find that 42% of their followers are "fake", a percentage comparable to the politicians themselves!

What is less funny is that this article prompted Portland Mayor Ted Wheeler to write a letter to Rod Rosenstein, Deputy Attorney General for the United States Department of Justice and a key figure in the Trump-Russia investigations. This letter cited the Willamette Week article, raised concern about "automated, nefarious activity aimed at official City online accounts," and claimed "it may be likely that Portland Twitter and Facebook accounts [...] are being used by foreign conspirators in an attempt to sow political discord into our local politics in Portland." Wheeler closed the letter by asking for a federal investigation into foreign interference in local Portland politics.

The Department of Justice has yet to respond.

Almost every article in the media about bots influencing politics in America cites the same, single source for its data: a publicly-available information dashboard called Hamilton 68. Named for Alexander Hamilton's 1788 essay extolling the virtues of the Electoral College system over a direct popular election, the dashboard is an easy-to-read web page that serves as a kind of weather report "tracking Russian influence operations on Twitter," according to their tagline.

Hamilton 68 is run by the Alliance for Securing Democracy, a program funded through the German Marshall Fund after the 2016 election to investigate foreign election influence. Their premier output so far has been the Hamilton 68 dashboard and its German counterpart, the Artikel 38 dashboard.

To their credit, the Alliance has published a fairly detailed methodology for Hamilton 68. They analyze 600 social media accounts that fall into one of three categories: accounts known to represent the Russian state, accounts that are patriotic and pro-Russia, and accounts that follow the previous two kinds of accounts and boost their messages. The dashboard itself lists trending hashtags and topics of conversation among those 600 accounts.

But they do not tell us what the accounts are. This is understandable, as it could affect their data collection. But they also don't specify how many of those 600 accounts are Russian-state-backed news outlets like RT (Russia Today), how many are probably real people, and how many are bots. And finally, 600 accounts is a pitiably small number of accounts to track on a social media platform like Twitter with well over 300 million monthly active users: less than 0.0002 percent of the user base.

In April 2018 the Alliance even went on the record calling the media's interpretation of their data inaccurate. Bret Schafer, their communication director, told The Daily Caller News Foundation, “[We] don’t track bots, or, more specifically, bots are only a small portion of the network that we monitor.” He went on: “We’ve tried to make this point clear in all our published reporting, yet most of the third party reporting on the dashboard continues to appear with some variation of the headline ‘Russian bots are pushing X…’” he said. “This is inherently inaccurate.”

Hamilton 68 only claims to track "the content and themes being promoted by Russian influencers online." Given the data that Hamilton 68 provides, it is simply irresponsible journalism to make almost any claims at all. The dashboard provides a top ten list of "Trending Topics", which are topics that have shown a large percentage increase in discussion in the last 48 hours. The ten topics almost always have at least five topics in common with English-speaking Twitter as a whole, and are entirely what you would expect to get if you measured any group of politically engaged English-speaking Twitter accounts at all.
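For readers who want to see what that kind of comparison looks like in practice, here is a minimal Python sketch. It is not Hamilton 68's code, which I have not seen. The function names, the idea of comparing two consecutive 48-hour windows, and all of the mention counts are my own assumptions, made up purely for illustration. It ranks topics by percentage increase and then checks how many "trending" topics a monitored group shares with a general sample.

```python
def trending_topics(previous, current, top_n=10):
    """Rank topics by percentage increase in mentions between two windows.

    `previous` and `current` map topic -> mention count for two
    consecutive time windows (hypothetical 48-hour windows here).
    """
    changes = {}
    for topic, now in current.items():
        before = previous.get(topic, 0)
        if before == 0:
            # No baseline, so a percentage increase is undefined; skip it.
            continue
        changes[topic] = (now - before) / before * 100
    ranked = sorted(changes, key=changes.get, reverse=True)
    return ranked[:top_n]


def shared_topics(list_a, list_b):
    """Return the topics that appear in both trending lists, sorted by name."""
    return sorted(set(list_a) & set(list_b))


# Entirely made-up counts for a small monitored group of accounts...
monitored_prev = {"syria": 100, "trump": 400, "nfl": 50, "brexit": 20}
monitored_now = {"syria": 300, "trump": 440, "nfl": 60, "brexit": 50}

# ...and for a hypothetical sample of English-speaking Twitter as a whole.
general_prev = {"syria": 1000, "trump": 5000, "nfl": 900, "brexit": 300}
general_now = {"syria": 2500, "trump": 5200, "nfl": 2000, "brexit": 330}

monitored_top = trending_topics(monitored_prev, monitored_now, top_n=2)
general_top = trending_topics(general_prev, general_now, top_n=2)

print("Monitored group:", monitored_top)
print("General sample:", general_top)
print("In common:", shared_topics(monitored_top, general_top))
```

The point of the sketch is that a "trending" list only tells you something about a group when it is set against a baseline. If the monitored accounts and the general sample mostly trend on the same topics, the list says very little about the monitored accounts in particular.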

It's clear upon inspection that the media narrative about an influx of Russian or otherwise foreign bots influencing politics in America is built on flimsy data and enormous leaps of logic. Further, the narrative empowers conspiracy theorists to make essentially whatever claims they want about anyone. The bots that do exist are drops of water in the ocean of social media, but I believe that the effect of constant front-page news stirring up fear about foreign influence can have far-reaching negative effects on any democracy.

Darius Kazemi is a 2018-2019 Mozilla Fellow who lives in Portland, Oregon, USA. If newspaper mentions and academic paper citations are to be believed, he is one of the world's foremost experts on bots. He makes weird internet art, writes open source software, gives talks, wrote a book about niche videogame history, is very serious about karaoke, and is blogging about the first 365 IETF Request For Comments (RFC) documents.

You can support his work on Patreon, which includes essays like this one, history blogs, open source social media software, fun internet toys, and more.